CP2K on Zen3 CPUs

December 3, 2025 in Benchmarks by Christian Drotleff

Benchmarking the performance and scalability of CP2K on MOGON NHR / KI.

CP2K scalability on HPC systems

As a simulation package for quantum chemistry and solid-state physics, CP2K provides a framework for many essential computational tasks for chemists and physicists alike. Since such simulations can be very computationally intensive, they are a natural fit for an HPC cluster such as MOGON NHR or MOGON KI. CP2K is known to scale relatively well even across a large number of nodes and uses both the Message Passing Interface (MPI) and OpenMP, which also makes it a good tool for benchmarking.

For this reason, a series of benchmarks was run on MOGON NHR to examine its performance and to get a feel for how different combinations of MPI tasks and OpenMP threads scale.

Benchmark setup

Hardware and software configuration

All benchmarks were run on the parallel partition of the MOGON NHR cluster. This partition provides dual-socket compute nodes with two 64-core AMD EPYC 7713 CPUs each, for a total of 128 cores per node. The nodes come in several memory configurations, the smallest of which has 256 GB. Since CP2K is usually compute-bound rather than memory-bound, 240 GB per node were requested for the computation; this amount fits on every memory configuration and therefore makes more nodes available for testing. Message passing between nodes is handled by a 100 Gbit/s InfiniBand network.

The CP2K version used was CP2K 2023.1 FOSS PSMP (the CPU variant with both MPI and OpenMP parallelization), provided by the pre-installed CP2K EasyBuild module. All compute nodes run AlmaLinux 8.7.

Benchmark choice

The H2O-DFT-LS benchmark provided on cp2k.org was chosen. It is a single-point energy calculation using linear-scaling Density Functional Theory (DFT). The system consists of 6144 atoms (2048 water molecules) in a cubic box with an edge length of roughly 39 Å. Because the algorithm is based on an iteration on the density matrix, it avoids the cubically scaling orthogonalization step of standard DFT and relies on sparse matrix-matrix multiplications instead. Its cost therefore grows linearly with system size, which makes it computationally much more favorable than standard DFT for large systems.
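To make the difference between linear and cubic scaling concrete, consider what happens to the cost $ C $ when the number of atoms $ N $ doubles. This is a back-of-the-envelope comparison of the asymptotic behavior only; it ignores prefactors, which typically keep standard DFT competitive for small systems:

$$
\begin{aligned}
\text{standard DFT:} \quad & C(N) \propto N^3 && \Rightarrow \quad \frac{C(2N)}{C(N)} = 2^3 = 8 \\
\text{linear-scaling DFT:} \quad & C(N) \propto N && \Rightarrow \quad \frac{C(2N)}{C(N)} = 2
\end{aligned}
$$

In the asymptotic regime, going from the 6144 atoms of this benchmark to 12288 atoms would thus roughly double the cost with the linear-scaling method, rather than increase it eightfold.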

Job configuration

Since MOGON NHR uses SLURM as its job scheduler, each run was configured as an sbatch script, for example:

```bash
#!/bin/bash
#-----------------------------------------------------------------
# Example SLURM job script to run CP2K with OMP and MPI on MOGON NHR.
#
# https://mogonwiki.zdv.uni-mainz.de/docs/scientific-computing/applications/cp2k/
# https://docs.archer2.ac.uk/research-software/cp2k/
#-----------------------------------------------------------------
#SBATCH -J cp2k_benchmark                          # Job name
#SBATCH -o ../out/psmp_N064_OMP008_MPI016.%j.out   # stdout file (%j expands to job ID; ../out must exist)
#SBATCH --nodes=64                                 # Total number of nodes (2x64 cores/node)
#SBATCH --ntasks-per-node=16                       # MPI tasks per node
#SBATCH --cpus-per-task=8                          # OpenMP threads per MPI task
#SBATCH --mem=240G                                 # Memory per node
#SBATCH --time=00:30:00                            # Run time (hh:mm:ss)
#SBATCH -p parallel                                # Partition name

# Keep OMP_NUM_THREADS consistent with --cpus-per-task above
export OMP_NUM_THREADS=${SLURM_CPUS_PER_TASK}
# Define one OpenMP place per physical core
export OMP_PLACES=cores

# Load all necessary modules (these are examples).
# Loading modules in the script ensures a consistent environment.
module purge
module use /apps/easybuild/2023/core/modules/all
module load chem/CP2K/2023.1-foss-2023a

# Launch the executable. --cpus-per-task is passed explicitly because some
# Slurm releases do not let srun inherit it from the sbatch allocation.
srun --cpus-per-task="${SLURM_CPUS_PER_TASK}" cp2k.psmp -i ../h20-dft-ls-4.inp
```

Scripts with different node counts and different distributions of MPI tasks and OpenMP threads were created from this template. For optimal resource usage, all configurations followed the rule that every core of a node runs exactly one thread:

$$ \text{MPI tasks per node} \times \text{OMP threads per task} = 128 \text{ cores per node} $$

To evaluate how runs with more or fewer MPI tasks and OpenMP threads per task perform, five configurations were tested:

| Configuration | A | B | C | D | E |
|---|---|---|---|---|---|
| MPI tasks per node | 8 | 16 | 32 | 64 | 128 |
| OpenMP threads per task | 16 | 8 | 4 | 2 | 1 |
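The five configurations in the table follow directly from the 128 cores per node: each step doubles the MPI tasks and halves the OpenMP threads. The Bash sketch below derives them and checks that no configuration leaves cores idle or oversubscribes a node; the function name `list_configs` is hypothetical and only illustrates the arithmetic.

```bash
#!/usr/bin/env bash
# Sketch: derive the MPI/OpenMP splits of configurations A-E from the
# node's core count and check tasks_per_node * threads_per_task == cores.
set -euo pipefail

CORES_PER_NODE=128   # 2 sockets x 64 cores (AMD EPYC 7713)

# Print "label tasks_per_node threads_per_task" for each configuration.
# Returns non-zero if a split would not use exactly all cores.
list_configs() {
  local labels=(A B C D E)
  local tasks=8
  local i threads
  for i in "${!labels[@]}"; do
    threads=$(( CORES_PER_NODE / tasks ))
    if (( tasks * threads != CORES_PER_NODE )); then
      echo "invalid split: ${tasks} x ${threads}" >&2
      return 1
    fi
    echo "${labels[$i]} ${tasks} ${threads}"
    tasks=$(( tasks * 2 ))   # next configuration: twice the MPI tasks
  done
}

list_configs
```

Running it prints `A 8 16` through `E 128 1`, matching the table; the second and third columns are the values for `--ntasks-per-node` and `--cpus-per-task` (and thus `OMP_NUM_THREADS`).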

The environment variables OMP_NUM_THREADS and OMP_PLACES were exported to ensure that all components of the software stack apply the configuration correctly. Each configuration was then tested on 4, 8, 16, 32, 64, 128 and 256 nodes. Combining the seven node counts with the five configurations yields a total of:

$$ 7 \text{ node counts} \times 5 \text{ configurations} = 35 \text{ runs} $$

In practice, however, two runs were omitted because they were highly unfavorable configurations that would have resulted in excessive resource usage, leaving 33 runs.
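Writing dozens of nearly identical job scripts by hand is error-prone. The following Bash sketch shows one way they could be generated from the job script template shown earlier, one file per combination of node count and configuration. The output directory `./jobs` is a hypothetical choice, nothing is submitted, and the sketch simply generates all combinations, leaving it to the user to skip unfavorable ones.

```bash
#!/usr/bin/env bash
# Sketch: write one sbatch script per (node count, MPI/OpenMP split) into
# ./jobs (hypothetical directory), mirroring the job script above.
set -euo pipefail

NODE_COUNTS=(4 8 16 32 64 128 256)
TASKS_PER_NODE=(8 16 32 64 128)   # configurations A-E
CORES_PER_NODE=128
OUT_DIR=./jobs

mkdir -p "$OUT_DIR"

for nodes in "${NODE_COUNTS[@]}"; do
  for tasks in "${TASKS_PER_NODE[@]}"; do
    threads=$(( CORES_PER_NODE / tasks ))
    # Zero-padded tag in the style of psmp_N064_OMP008_MPI016
    tag=$(printf 'psmp_N%03d_OMP%03d_MPI%03d' "$nodes" "$threads" "$tasks")
    cat > "${OUT_DIR}/${tag}.sh" <<EOF
#!/bin/bash
#SBATCH -J cp2k_benchmark
#SBATCH -o ../out/${tag}.%j.out
#SBATCH --nodes=${nodes}
#SBATCH --ntasks-per-node=${tasks}
#SBATCH --cpus-per-task=${threads}
#SBATCH --mem=240G
#SBATCH --time=00:30:00
#SBATCH -p parallel

export OMP_NUM_THREADS=${threads}
export OMP_PLACES=cores

module purge
module use /apps/easybuild/2023/core/modules/all
module load chem/CP2K/2023.1-foss-2023a

# Pass --cpus-per-task explicitly; some Slurm releases do not inherit it in srun
srun --cpus-per-task=${threads} cp2k.psmp -i ../h20-dft-ls-4.inp
EOF
  done
done

echo "Generated $(ls "$OUT_DIR" | wc -l | tr -d ' ') job scripts in ${OUT_DIR}"
```

Each generated file can then be submitted with `sbatch`. The 30-minute time limit is copied from the 64-node example and may need to be raised for the smaller node counts.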

Benchmark results

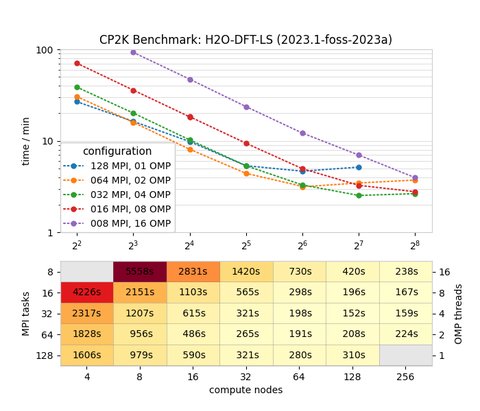

Plotting the results shows that the speedup of all configurations scales roughly linearly up to 16 compute nodes. Beyond that, the growing overhead of intra-node and especially inter-node communication causes the parallel efficiency to drop until no further speedup can be achieved. For the configurations that reach this point, adding even more nodes actually decreases performance.
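Speedup and parallel efficiency can be computed from the measured wall-clock times. Since no single-node runs were performed, the sketch below assumes the smallest node count in the input (4 nodes here) as the baseline $ N_0 $, with speedup $ S(N) = T(N_0) / T(N) $ and efficiency $ E(N) = S(N) \cdot N_0 / N $. The input format (one `nodes seconds` pair per line) and the function name `scaling_table` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: compute speedup and parallel efficiency relative to the smallest
# node count in the input. Input format (hypothetical): "nodes seconds" per line.
set -euo pipefail

# Usage: scaling_table [file ...]   (reads stdin if no file is given)
# Output: "nodes speedup efficiency" per line, sorted by node count.
scaling_table() {
  sort -n -k1,1 "$@" | awk '
    NR == 1 { n0 = $1; t0 = $2 }        # baseline: smallest node count
    {
      speedup = t0 / $2                 # S(N) = T(N0) / T(N)
      eff = speedup * n0 / $1           # E(N) = S(N) * N0 / N
      printf "%d %.2f %.2f\n", $1, speedup, eff
    }'
}
```

Running it once per configuration (for example on a hypothetical file such as `timings_B.txt`) gives one speedup curve per configuration. An efficiency close to 1.00 corresponds to the linear region of the plot; falling values mark the point where communication overhead starts to dominate.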

MPI vs OMP

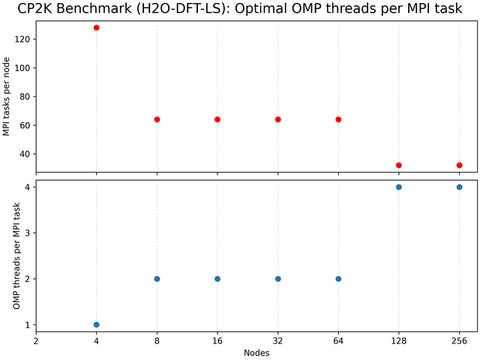

Benchmark runs scaled very differently depending on how many MPI tasks per node were used and how many OpenMP threads each task ran. At the smallest node count (4 nodes), using MPI exclusively (128 tasks per node with 1 thread each) yielded the fastest results. This is likely because MPI allows the work to be distributed across the nodes more effectively.

At the same time, the total number of tasks ( $ 4 \times 128 = 512 $ ) is not yet large enough to cause bottlenecks, especially in networking. As the number of nodes, and with it the number of tasks, increases, the limited network bandwidth becomes more and more of a problem. At 256 nodes, the fastest configurations use 16 or 32 MPI tasks per node.
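The total number of MPI tasks is the node count multiplied by the tasks per node. Comparing the extremes illustrates how quickly the task count grows with pure MPI:

$$
\begin{aligned}
4 \text{ nodes} \times 128 \text{ tasks/node} &= 512 \text{ MPI tasks} \\
256 \text{ nodes} \times 128 \text{ tasks/node} &= 32768 \text{ MPI tasks} \\
256 \text{ nodes} \times 32 \text{ tasks/node} &= 8192 \text{ MPI tasks} \\
256 \text{ nodes} \times 16 \text{ tasks/node} &= 4096 \text{ MPI tasks}
\end{aligned}
$$

At 256 nodes, pure MPI therefore runs 64 times as many tasks as at 4 nodes, and 4 to 8 times as many as the hybrid configurations that performed best there, which plausibly adds considerable communication overhead on the network.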

Because of this relationship, the ideal number of OpenMP threads per MPI task grows with the node count: it starts at 1 for 4 nodes and increases to 4 (or 8) for 256 nodes.

Comparability

While the results give an overview of how the linear-scaling DFT algorithm in CP2K runs on MOGON NHR, they do not necessarily give an accurate assessment of the cluster's overall performance. One reason is that the EPYC 7713 CPUs do not support the AVX-512 instruction set extensions, which under some circumstances are highly beneficial for matrix-multiplication-heavy workloads, such as those handled by the linear algebra libraries CP2K relies on (for example ScaLAPACK for dense diagonalization, or DBCSR for the sparse matrix multiplications used in this benchmark). However, the exact performance penalty caused by the missing AVX-512 support is difficult to quantify. Therefore, the benchmark results presented in this article cannot easily be compared with results of the same benchmark on other clusters, especially those with AVX-512 support.
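When comparing results across clusters, it helps to check whether the compute nodes actually advertise AVX-512. On Linux, the supported x86 instruction set extensions appear in the `flags` line of `/proc/cpuinfo`, where `avx512f` (AVX-512 Foundation) denotes the base subset. The Bash sketch below checks for it; the function name `has_avx512` is hypothetical, and an alternative file can be passed for testing.

```bash
#!/usr/bin/env bash
# Sketch: report whether the CPU advertises AVX-512 Foundation (avx512f)
# in the flags line of /proc/cpuinfo (Linux, x86). Optional argument: path
# to a file in /proc/cpuinfo format.
set -euo pipefail

has_avx512() {
  local cpuinfo=${1:-/proc/cpuinfo}
  # Take the first "flags" line and look for avx512f as a whole word
  grep -m1 '^flags' "$cpuinfo" | grep -qw 'avx512f'
}

if has_avx512; then
  echo "AVX-512 supported"
else
  echo "AVX-512 not supported"
fi
```

Run inside a job on a compute node (for example via `srun`), it reports on the node's CPUs rather than on the login node's.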